Is Deepseek Safe to Use? A Look at Privacy, Security, and Vulnerabilities

Introduction: Is Deepseek Safe to Use in the High-Stakes World of AI and Finance?

The artificial intelligence landscape is evolving at breakneck speed. Powerful new tools emerge constantly, promising unprecedented efficiency gains, enhanced decision-making, and significant competitive advantages. Deepseek, a prominent AI research lab and its associated applications, has generated considerable buzz, particularly for its impressive coding and language model capabilities. But is Deepseek safe to use? That’s the core concern for C-suite executives, discerning investors, and high-net-worth individuals entrusted with sensitive data and high-impact decisions.

The allure of advanced AI capabilities must be weighed against potential privacy risks and cybersecurity vulnerabilities. Adopting any new technology, especially one that, like an AI app, may handle vast amounts of data, requires rigorous due diligence. This is particularly true for tools originating from regions with different data privacy regulations and geopolitical considerations. This post provides a balanced assessment of the potential benefits and inherent risks of entrusting data to the Deepseek platform, so that you can make informed strategic decisions.


Understanding Deepseek AI: Capabilities and Origins

Before assessing safety, it’s crucial to understand what Deepseek is. Deepseek isn’t just a single chatbot; it’s an AI research lab based in China, known for developing sophisticated AI models, including powerful large language models (LLMs) and specialized coding models like Deepseek Coder. Their models have demonstrated strong performance, sometimes rivalling established players like OpenAI’s ChatGPT and Anthropic’s Claude.

Key aspects include:

- Chinese Origins: Deepseek is a Chinese artificial intelligence research company, reportedly funded privately by the Chinese hedge fund High-Flyer. This origin is central to many privacy concerns.

- Advanced Capabilities: Their models excel in areas like code generation, translation, and complex reasoning tasks, making the Deepseek AI app attractive for developers and potentially for automating certain business processes.

- Accessibility: Deepseek offers access via an API and user-facing applications (like their chatbot), making it relatively easy to integrate or experiment with.

Is Deepseek Safe to Use? A Privacy Risk Breakdown

The answer to “is Deepseek safe to use” is complex and depends heavily on your specific use case, risk tolerance, and the sensitivity of the data involved. There isn’t a simple “yes” or “no.” Instead, decision-makers must evaluate several key risk factors.

General risks associated with many AI apps include:

- Data Collection: AI models learn from vast datasets and often collect user interaction data (queries, prompts, feedback) to improve performance.

- Data Storage: Where and how this collected data is stored raises security questions.

- Data Usage: How the company utilizes collected data (for training, analytics, etc.) is often opaque.

- Potential for Leaks: Like any digital platform, AI services are susceptible to breaches.

However, using Deepseek introduces specific considerations primarily linked to its origin.

Deep Dive into Deepseek Data Privacy and Security Concerns

When evaluating whether Deepseek is safe to use, several specific areas warrant close examination:

- Data Collection Practices:

  - What specific user data does Deepseek collect? Based on typical AI industry practice, and as likely outlined (though perhaps vaguely) in its Terms of Service, this could include prompts, generated responses, IP addresses, device information, and potentially account details if registration is required.

  - The critical concern is whether personal information or sensitive data inadvertently entered into the AI chatbot (e.g., proprietary code, confidential business strategies, client information) is collected and retained.

- Data Storage and Jurisdiction:

  - A primary concern is where data is stored. Given that Deepseek is a Chinese company, it’s highly probable that data is stored on servers in China.

  - This is significant because data stored in China falls under Chinese jurisdiction and laws, including the National Intelligence Law, which can compel companies to cooperate with state authorities, including by providing data upon request. This raises serious national security concerns for international users, particularly businesses.

- Transparency and Security Measures:

  - How transparent is Deepseek about its data collection and usage policies? Privacy policies are often ambiguous.

  - What cybersecurity measures are in place to protect user data from breaches or malicious actors? While Deepseek likely employs standard security practices, independent verification is difficult. Concerns were heightened in January 2025, when researchers at Wiz (a cloud security company) reported a publicly accessible Deepseek database that exposed chat histories and other sensitive information; Deepseek reportedly secured it after being notified. Similar misconfigurations are possible across the AI industry.

- Potential for Censorship and Monitoring:

  - Anecdotal reports and user tests suggest that AI chatbots developed by Chinese companies, potentially including Deepseek, may censor outputs related to politically sensitive topics (e.g., Taiwan, the 1989 Tiananmen Square massacre). While this primarily affects content generation, it hints at underlying monitoring and filtering mechanisms potentially influenced by state directives.

The ‘China Factor’: Geopolitical Risks and National Security Implications

For C-suite executives and investors, the geopolitical context is paramount. Using technology developed by a Chinese artificial intelligence company involves navigating a complex landscape:

- Data Sovereignty: Data entered into Deepseek could be subject to access by the Chinese government, posing risks to intellectual property, trade secrets, and sensitive corporate or client information. This is a significant hurdle for industries with strict compliance and confidentiality requirements (e.g., finance, defense, healthcare).

- Regulatory Differences: China’s privacy laws differ significantly from GDPR (Europe) or CCPA (California). While China has implemented data protection laws (like PIPL), the state’s access rights often supersede individual or corporate privacy expectations held in Western jurisdictions.

- Supply Chain Risk: Relying heavily on a foreign AI provider, especially from a region with potential geopolitical friction, introduces strategic risk. Access could be restricted, or policies could change unexpectedly. This contrasts with using US tech companies like OpenAI or Anthropic, which operate under different legal and geopolitical frameworks.

Comparing Deepseek to Alternatives like ChatGPT and Claude

When considering whether Deepseek is safe to use, comparing it to alternatives is useful:

- OpenAI (ChatGPT) & Anthropic (Claude): These are leading US tech companies. While not immune to security concerns or data privacy debates (e.g., questions around training data), they operate under US and EU legal frameworks. They offer enterprise tiers with stronger privacy commitments (e.g., not using API data for training). However, using their free or consumer versions still involves data collection.

- Jurisdiction: The key difference lies in jurisdiction and the potential for government access. Data handled by US companies is subject to US laws (like FISA 702), while data handled by Deepseek is subject to Chinese laws. The level of perceived risk often differs based on the user’s location and threat model.

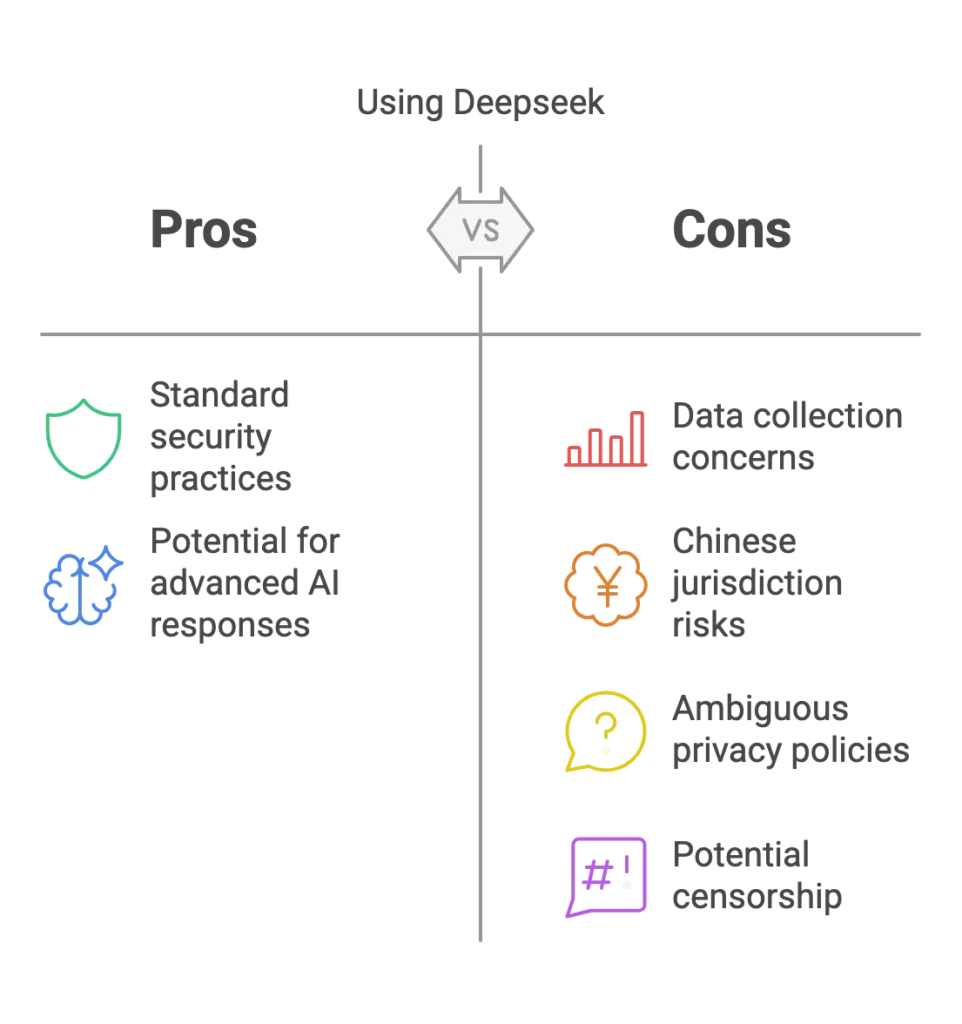

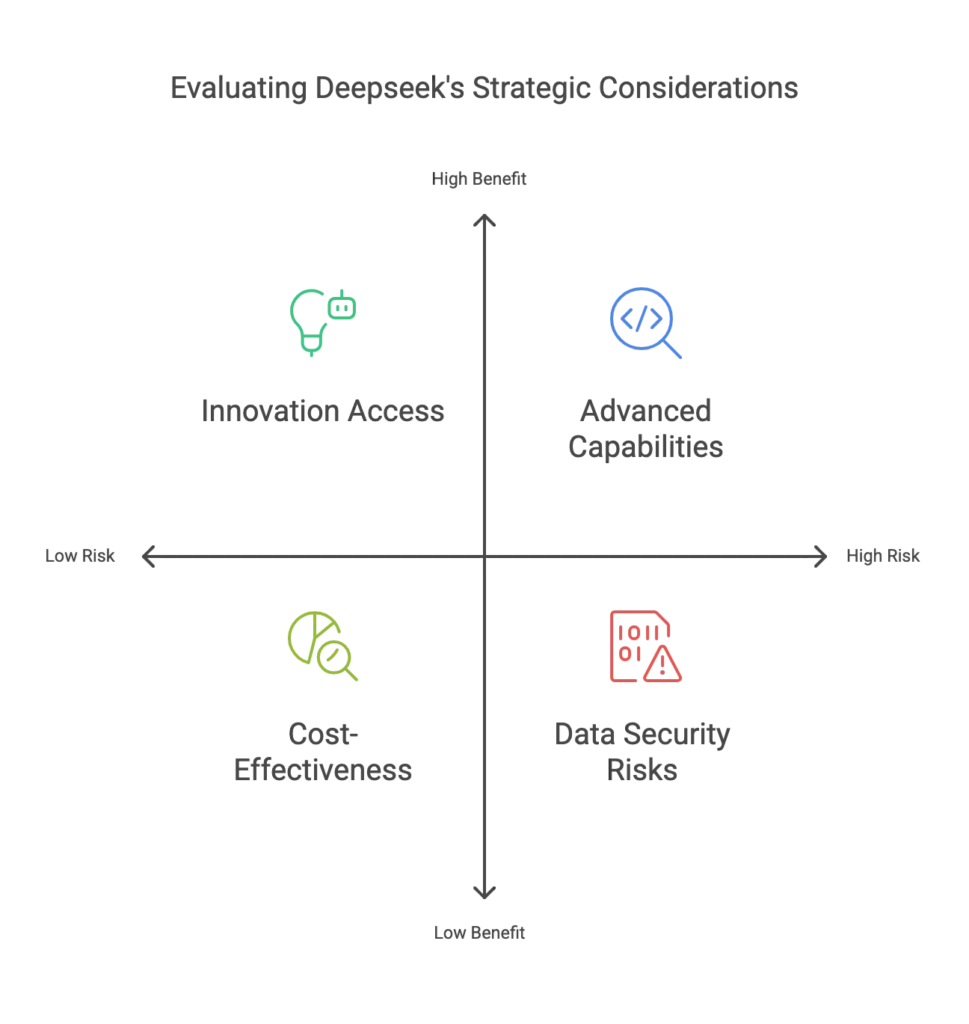

Potential Benefits vs. Risks for Finance Decision-Makers

- Potential Benefits:

  - Advanced Capabilities: Deepseek models might offer superior performance in specific tasks (especially coding).

  - Innovation Access: Experimenting with diverse AI tools can foster innovation.

  - Cost: Depending on pricing models, Deepseek might offer a cost-effective alternative.

- Significant Risks:

  - Data Security & Privacy: High risk, especially for sensitive information, due to potential state access and jurisdictional issues.

  - IP Protection: Risk of proprietary information exposure.

  - Compliance: Potential violation of data residency or privacy regulations (e.g., GDPR if personal data of individuals in the EU is processed without adequate safeguards).

  - Reputational Risk: Association with tools perceived as insecure or linked to state surveillance could damage brand reputation.

Practical Steps: How to Stay Safe When Using Deepseek

If you or your organization are considering using Deepseek, adopting a cautious, risk-mitigation approach is essential:

- Assume No Privacy for Sensitive Data: Never input confidential corporate information, client data, intellectual property, trade secrets, or sensitive personal data into the public version of Deepseek or any AI tool where you don’t have explicit, enterprise-grade privacy guarantees.

- Review Terms of Service and Privacy Policies: Understand what Deepseek says it collects and how it uses data, but read critically, acknowledging the legal framework it operates within.

- Use for Non-Sensitive Tasks Only: Limit usage to tasks involving publicly available information or non-proprietary activities (e.g., general knowledge queries, brainstorming non-sensitive ideas, working with open-source code).

- Implement Strict Internal Policies: Establish clear guidelines within your organization about acceptable AI tool usage, specifying which tools are approved for which types of data.

- Consider Sandboxing: For technical teams exploring capabilities, use isolated environments (sandboxes) that don’t connect to internal networks or sensitive databases.

- Stay Informed: Monitor news regarding Deepseek’s security posture, any reported data breaches or exposures, and evolving geopolitical tensions or regulations. Follow research from cybersecurity firms like Wiz.

- Prioritize Secure Alternatives: For tasks involving sensitive data, opt for enterprise-level AI solutions from providers offering robust data privacy agreements, clear jurisdictional standing (preferably aligned with your own), and strong security certifications (e.g., OpenAI Enterprise, Microsoft Azure OpenAI Service, Anthropic’s enterprise offerings).
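For technical teams, the first few steps above (keeping sensitive data out of prompts and enforcing an internal policy on which tools may see which data) can be partly automated. The following is a minimal Python sketch under stated assumptions, not a production control: the regular expressions, the tool names (`public_chatbot`, `enterprise_ai`), and the data classes are all hypothetical placeholders, and a real deployment would rely on a vetted data loss prevention tool and your own classification scheme.

```python
import re
from dataclasses import dataclass

# Hypothetical, deliberately simple patterns for illustration only.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "api_key": re.compile(r"\b(?:sk|key|token)[-_][A-Za-z0-9]{16,}\b"),
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}

# Hypothetical internal policy: which data classes each tool may receive.
APPROVED_DATA_CLASSES = {
    "public_chatbot": {"public"},
    "enterprise_ai": {"public", "internal", "confidential"},
}


@dataclass
class ScreeningResult:
    allowed: bool          # True only if policy permits and nothing was flagged
    redacted_prompt: str   # prompt with flagged spans replaced by placeholders
    findings: list[str]    # labels of the patterns that matched


def screen_prompt(prompt: str, tool: str, data_class: str) -> ScreeningResult:
    """Check a prompt against the policy and pattern list before it leaves the network."""
    findings = []
    redacted = prompt
    for label, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(redacted):
            findings.append(label)
            redacted = pattern.sub(f"[REDACTED:{label}]", redacted)

    # Unknown tools are treated as unapproved for every data class.
    class_ok = data_class in APPROVED_DATA_CLASSES.get(tool, set())
    return ScreeningResult(
        allowed=class_ok and not findings,
        redacted_prompt=redacted,
        findings=findings,
    )


if __name__ == "__main__":
    result = screen_prompt(
        "Contact jane.doe@example.com about card 4111 1111 1111 1111",
        tool="public_chatbot",
        data_class="public",
    )
    print(result.allowed, result.findings)
    print(result.redacted_prompt)
```

A check like this would sit in whatever gateway or wrapper your teams use to reach external AI services. Pattern matching catches only obvious identifiers, so it complements rather than replaces the policy and training measures listed above.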

Conclusion: A Calculated Risk Requiring Strategic Oversight

So, is Deepseek safe to use? For high-level decision-makers, investors, and HNWIs, the answer leans towards significant caution. While Deepseek’s AI capabilities are noteworthy, the privacy risks, data security uncertainties, and geopolitical implications associated with its Chinese origins present substantial challenges, particularly when the data involved is sensitive or proprietary.

The potential efficiency gains must be rigorously weighed against the potential for data exposure, IP loss, and compliance violations. For non-sensitive, public-facing tasks, the risk might be manageable with careful usage. However, for core business operations, strategic planning, client data management, or handling any form of confidential information, the current risk profile associated with using Deepseek appears unacceptably high for most Western organisations operating under stringent regulatory and security expectations.

Next Steps

Navigating the complex intersection of artificial intelligence, risk management, and geopolitics requires expert guidance. Before integrating any new AI tool like Deepseek into your operations or investment strategy, ensure you have conducted thorough due diligence and risk assessment.

Are you evaluating AI tools for your business or portfolio? Contact us today for a strategic consultation on managing AI risks, ensuring data privacy, and leveraging artificial intelligence safely and effectively within your specific financial and operational context. We can help you develop robust governance frameworks and make informed decisions that protect your assets and maintain your competitive edge in the age of AI.