AI Fraud Detection in Finance: Spotting the Top 10 Deepfake Threats in 2025

The democratisation of generative AI tools has dramatically lowered the barrier to entry for creating high-fidelity deepfakes. What once required significant computing power and expertise can now be achieved with relative ease, enabling fraudsters to execute complex scams at scale. Traditional AI fraud detection methods, which often rely on spotting anomalies in data patterns, can struggle against attacks that use seemingly legitimate, albeit fabricated, human interactions.

The core issue is the erosion of trust in digital communication. When a CEO’s voice can be convincingly cloned to request a fraudulent wire transfer or facilitate credit card fraud, and an investment analyst’s video can be manipulated to spread false market information, the very foundations of secure financial operations are threatened. This necessitates a paradigm shift in AI fraud detection and prevention. We need advanced AI fraud detection systems that don’t just analyse transaction data but can also scrutinise the authenticity of audio-visual communications. Machine learning models must be trained not only to detect fraud patterns but also to identify the subtle digital fingerprints left by AI generation.

The New Frontier: Deepfakes and AI Fraud Detection in Finance

In the rapidly evolving landscape of financial services, the integration of artificial intelligence has unlocked unprecedented efficiencies and insights. However, the same technological advances have inadvertently armed fraudsters with powerful new weapons. Among the most alarming is deepfake technology: AI-generated synthetic media so convincing it can deceive even discerning eyes and ears.

For C-suite executives, investors, and high-net-worth individuals (HNWIs), understanding and mitigating the risks posed by deepfakes isn’t just prudent; it’s critical for safeguarding assets, maintaining market integrity, and preserving hard-earned reputations. The rise of generative AI means deepfake scams in banking and finance are no longer theoretical threats; they are active, sophisticated attacks causing significant financial loss. Effective AI fraud detection in finance must now explicitly address this burgeoning challenge. This post delves into the top 10 deepfake threats currently menacing the financial sector and outlines actionable strategies for robust fraud prevention.

Top 10 Deepfake Threats Targeting Financial Services (and How to Fortify Your Defenses)

The sophistication of deepfake technology in financial services presents multifaceted risks. Here are ten critical threats demanding immediate attention and robust mitigation plans, crucial for anyone involved in high-level financial decision-making or investment:

1. Executive Impersonation Scams (The “CEO Fraud” Reimagined)

- The Threat: Voice & Video Clones Demanding Urgent Action

AI and machine learning are now being used to clone the voice, and even the video likeness, of senior executives. Fraudsters use these deepfakes to trick finance staff into making urgent payments or sharing sensitive data. It’s a more advanced spin on traditional Business Email Compromise (BEC). To counter it, companies are starting to use anomaly detection tools that flag unusual speech, facial cues, or behaviour that doesn’t quite add up.

- Mitigation Strategy: Layered Verification & Human Oversight

- Multi-Factor Authentication (MFA): Apply MFA not just to system access but also to high-value transaction approvals, so that approving a payment requires more than one independent proof of identity.

- Out-of-Band Verification: Establish strict protocols requiring verification through a different, pre-established communication channel (e.g., a direct phone call to a known number, a secure internal messaging app) for any unusual or high-value financial request, regardless of its apparent source.

- Keyword Protocols: Implement challenge questions or code words for verbal verification of sensitive requests.

- Employee Training: Continuously educate finance teams about the existence and realism of deepfake scams in banking and finance, emphasising adherence to verification protocols even under pressure. This is key to preventing AI fraud in finance.
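The out-of-band rule is easy to state and easy to erode under pressure, so some teams encode it directly in the payment workflow. The sketch below is a minimal illustration only, assuming a hypothetical internal directory of pre-registered callback numbers, a hypothetical £10,000 threshold, and a hypothetical "new beneficiary" trigger; the names `PaymentRequest`, `DIRECTORY`, `needs_callback`, and `may_release` are invented for this example. The key design choice is that the callback number always comes from the directory, never from the request, so a cloned voice cannot supply its own "verification" number.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold above which a callback is mandatory.
CALLBACK_THRESHOLD = 10_000

# Hypothetical directory of pre-registered numbers, maintained separately
# from any inbound request (e.g. by HR or IT).
DIRECTORY = {
    "ceo@example.com": "+44 20 7946 0000",
}


@dataclass
class PaymentRequest:
    requester: str          # claimed identity, e.g. the sender address
    amount: float
    new_beneficiary: bool   # paying an account not seen before
    contact_number: str     # number supplied in the request itself (untrusted)


def needs_callback(req: PaymentRequest) -> bool:
    """High-value or unusual requests always require an out-of-band check."""
    return req.amount >= CALLBACK_THRESHOLD or req.new_beneficiary


def callback_number(req: PaymentRequest) -> Optional[str]:
    """Return the number to call back, taken only from the directory.

    The number in the request is deliberately ignored because an attacker
    controls it. None means the requester is unknown: escalate, don't pay.
    """
    return DIRECTORY.get(req.requester)


def may_release(req: PaymentRequest, callback_confirmed: bool) -> bool:
    """Funds move only if no callback is needed or one was confirmed."""
    if not needs_callback(req):
        return True
    return callback_number(req) is not None and callback_confirmed
```

For a request "from the CEO" for £250,000 to a new beneficiary, `callback_number` returns the directory number rather than the one quoted in the message, and `may_release` stays `False` until a person confirms the call on that number.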

2. Fabricated Earnings Calls and Investor Communications

- The Threat: Manipulating Markets with Synthetic Realities

Deepfakes can be used to create entirely fabricated audio or video recordings of earnings calls, investor updates, or executive interviews. These fakes might announce fabricated positive results to inflate a stock price ahead of a sell-off (a classic pump and dump), or spread negative news to enable short selling or damage competitors. This directly affects investors and market stability.

- Mitigation Strategy: Multi-Channel Verification & Stakeholder Education

- Official Channels Only: Direct stakeholders to rely exclusively on official, secured investor relations portals and verified company communication channels for critical information.

- Cross-Verification: Encourage cross-referencing information across multiple reputable financial news sources before acting.

- Watermarking/Digital Signatures: Explore technologies for digitally signing or watermarking official video/audio releases to prove authenticity.

- Investor Education: Proactively inform investors and analysts about the potential for deepfake manipulation and how to verify official communications. Stakeholder awareness of financial deepfakes is itself a crucial line of defense.
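One lightweight form of the watermarking and digital-signature idea is to publish a cryptographic fingerprint (a SHA-256 hash) of each official recording on the secured investor relations portal, so anyone can check a circulating clip against it. The sketch below assumes such a published list exists; the function names are hypothetical. A hash only proves a file is byte-for-byte identical to the published original, so a clip re-encoded by a social platform will also fail the check, and the hash list is only as trustworthy as the channel it is published on. Production schemes would more likely use public-key signatures or a content-provenance standard such as C2PA, which this sketch does not implement.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of a media file's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def fingerprint_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a large video or audio file in 1 MiB chunks to bound memory use."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def is_official(data: bytes, published: set[str]) -> bool:
    """True only if the clip exactly matches a fingerprint on the official list."""
    return fingerprint(data) in published
```

Any edit to the file, even a single altered frame, produces a completely different digest, which is why the comparison is an exact set lookup rather than a similarity score.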

3. M&A Manipulation & Sabotage

- The Threat: Deepfakes Derailing High-Stakes Deals

During sensitive merger and acquisition (M&A) negotiations, deepfakes can simulate internal communications (e.g., a fake video call between executives discussing withdrawing from the deal) to mislead the other party, sow discord, or leak manipulated information to sabotage the process. Competitors could also use deepfakes to spread false rumours about a target company’s stability.

- Mitigation Strategy: Secure Channels & Enhanced Due Diligence

- Secure Communication Platforms: Utilize end-to-end encrypted, verified communication channels for all sensitive M&A discussions. Avoid relying solely on standard video conferencing without additional identity verification.

- Rigorous Verification: Implement strict protocols for verifying the identity of participants in crucial meetings and the authenticity of shared documents.

- Information Control: Limit the dissemination of sensitive negotiation details and monitor for leaks or suspicious communications. An effective AI fraud detection strategy must encompass communication security.

4. Reputation Attacks on Key Individuals & Institutions

- The Threat: Weaponized Deepfakes Tarnishing Trust

Malicious actors can create deepfake videos or audio recordings depicting executives, board members, or even prominent investors engaging in scandalous, illegal, or unethical behaviour. Even if quickly debunked, the initial reputational damage can be severe, impacting stock prices, investor confidence, and personal careers.

- Mitigation Strategy: Proactive Monitoring & Crisis Response Planning

- Digital Footprint Monitoring: Employ services that actively monitor online platforms (including social media and the dark web) for mentions and potentially malicious synthetic media targeting key personnel and the institution. AI detection tools can assist here.

- Pre-Prepared Crisis Communications: Develop a robust crisis management plan specifically addressing deepfake attacks, including swift debunking strategies and communication protocols with stakeholders, media, and regulators.

- Legal Recourse: Be prepared to pursue legal action against perpetrators when identified.

5. Compromised Investor Due Diligence

- The Threat: Illusionary Teams & Fabricated Performance

Startups seeking funding, or fund managers pitching to limited partners (LPs), could use deepfakes to create convincing video presentations featuring fake team members with impressive (but fabricated) credentials, or to showcase misleading product demonstrations or customer testimonials. This undermines the core of investor due diligence and is particularly relevant for venture capital and private equity.

- Mitigation Strategy: Rigorous Identity Verification & In-Person Validation

- In-Person/Trusted Video Meetings: Whenever possible, insist on in-person meetings or video calls via highly secure, trusted platforms for key personnel introductions and critical discussions.

- Thorough Background Checks: Conduct comprehensive background checks on key management and team members, verifying credentials through independent sources.

- Cross-Reference Claims: Independently verify claims about customers, partnerships, and performance data. Don’t rely solely on provided materials. Due diligence teams need to stay alert to the possibility that videos, demos, and testimonials have been synthetically generated.

6. Fraudulent KYC and Onboarding Processes

- The Threat: Synthetic Identities Bypassing Security Gates

Generative AI can create highly realistic, unique “synthetic” faces and identity document templates. Fraudsters use these to bypass Know Your Customer (KYC) and Anti-Money Laundering (AML) checks during online account opening processes, creating mule accounts for money laundering or accessing financial services fraudulently. This is a direct challenge to compliance and enables synthetic identity fraud.

- Mitigation Strategy: Advanced Biometrics & AI-Powered Liveness Detection

- Multi-Modal Biometrics: Implement KYC processes that rely on multiple biometric factors (e.g., face, fingerprint, voice) where feasible.

- Active Liveness Detection: Utilize sophisticated liveness detection technologies during video onboarding that can distinguish between a live person and a presentation attack (e.g., holding up a photo or video, or using a deepfake). AI fraud detection systems are critical here.

- AI Document Verification: Employ AI tools capable of detecting the subtle inconsistencies and artifacts common in forged or AI-generated identity documents. This is a domain where AI is already used effectively for fraud detection.
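Active liveness checks commonly rely on an unpredictable challenge, such as a random sequence of head turns and blinks, so that a pre-recorded or pre-generated video is unlikely to match. The sketch below shows only that challenge-and-check logic, under stated assumptions: the action list, the 10-second window, and the idea that a separate (hypothetical) vision model reports which actions it observed and when are all invented for illustration. Recognising the actions themselves, and resisting real-time deepfake injection into the camera feed, is the hard part and is not shown here.

```python
import secrets
from dataclasses import dataclass

# Hypothetical set of prompts the onboarding app can ask the user to perform.
ACTIONS = ["turn_left", "turn_right", "blink", "smile", "look_up"]


@dataclass
class Challenge:
    actions: list[str]
    issued_at: float       # seconds, from the server's clock
    window: float = 10.0   # hypothetical time limit for completing all actions


def issue_challenge(n: int, now: float) -> Challenge:
    """Pick n actions with a CSPRNG so the sequence cannot be predicted."""
    return Challenge(actions=[secrets.choice(ACTIONS) for _ in range(n)], issued_at=now)


def passes(ch: Challenge, observed: list[tuple[str, float]]) -> bool:
    """Check (action, timestamp) pairs reported by a hypothetical vision model.

    Passes only if the observed actions match the challenge exactly and in
    order, with timestamps non-decreasing and inside the time window.
    """
    if [action for action, _ in observed] != ch.actions:
        return False
    times = [t for _, t in observed]
    deadline = ch.issued_at + ch.window
    in_window = all(ch.issued_at <= t <= deadline for t in times)
    return in_window and times == sorted(times)
```

Because the sequence is drawn fresh for each session, a fraudster replaying a stored clip would have to guess it in advance; the time window limits how long an attacker has to synthesise a matching response on the fly.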

7. Impersonation of Compliance Officers & Internal Authorities

- The Threat: Exploiting Trust from Within

Similar to executive impersonation, fraudsters might use deepfakes to mimic internal auditors, compliance officers, or IT administrators. They could then instruct employees to bypass certain controls, grant unauthorised system access, or approve illicit transactions under the guise of legitimate authority or testing procedures.

- Mitigation Strategy: Strict Internal Protocols & Employee Training

- Internal Verification Codes: Implement secure internal codes or challenge-response systems for validating instructions from compliance or authority figures, especially for sensitive actions.

- Segregation of Duties & Approvals: Reinforce policies requiring multi-person approvals for critical actions, so that a single compromised or deceived employee cannot complete them alone.

- Targeted Training: Educate employees specifically about the risk of internal impersonation via deepfakes and the importance of adhering to verification protocols without exception. This is a core part of any fraud prevention strategy.
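A challenge-response scheme avoids the weakness of static code words, which can be overheard or phished once and then reused. In the minimal sketch below, assuming the employee and the genuine compliance officer each hold a shared secret provisioned in advance (for example in a managed authenticator app), the employee reads out a random challenge and the caller must reply with a six-digit code derived from it using HMAC-SHA256. The truncation step loosely follows the HOTP approach from RFC 4226 but is not a conforming implementation, and the function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def new_challenge() -> str:
    """Random 8-digit challenge the employee reads out to the caller."""
    return f"{secrets.randbelow(10**8):08d}"


def response_code(secret: bytes, challenge: str, digits: int = 6) -> str:
    """Derive a short numeric code from HMAC-SHA256(secret, challenge).

    Uses HOTP-style dynamic truncation (RFC 4226), but with SHA-256
    instead of SHA-1, so it is an illustration rather than standard HOTP.
    """
    digest = hmac.new(secret, challenge.encode(), hashlib.sha256).digest()
    offset = digest[-1] & 0x0F  # low 4 bits pick a 4-byte window
    value = int.from_bytes(digest[offset:offset + 4], "big") & 0x7FFFFFFF
    return f"{value % 10**digits:0{digits}d}"


def verify(secret: bytes, challenge: str, spoken_code: str) -> bool:
    """Constant-time comparison of the caller's answer with the expected code."""
    return hmac.compare_digest(response_code(secret, challenge), spoken_code)
```

A deepfaked voice, however convincing, cannot produce the correct code without the secret, and because each challenge is fresh, a code captured from an earlier call is useless for the next one.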

8. Market Manipulation via Fake Financial News & Analysis

- The Threat: AI-Generated “Experts” Spreading Disinformation

Deepfake videos can be created showing well-known financial analysts, economists, or influential investors making false predictions, endorsing dubious assets (for example, as part of pump-and-dump schemes), or spreading panic-inducing news to manipulate market sentiment for the fraudster’s gain. This disinformation can spread rapidly through social media.

- Mitigation Strategy: Source Verification & Cross-Referencing

- Rely on Reputable Sources: Train teams and advise clients to rely on established, credible financial news outlets and official sources rather than unverified social media clips or forums.

- Fact-Checking Tools: Utilise and promote the use of fact-checking services and platforms.

- Critical Assessment: Foster a culture of critical thinking and skepticism towards sensational or uncorroborated financial “news,” especially if it urges immediate action. Detecting fraud here involves media literacy.

9. Deceptive Private Equity Exits & Valuations

- The Threat: Inflating Value with Fabricated Evidence

When a company is being prepared for sale or IPO, particularly in private equity scenarios, there’s a risk (though complex to execute) that deepfakes could be used subtly. Examples include creating fake video testimonials from non-existent “major” clients or manipulating video footage of operations to suggest higher efficiency or capacity, thereby artificially inflating the company’s valuation.

- Mitigation Strategy: Independent Audits & Third-Party Verification

- Rigorous Independent Audits: Mandate thorough, independent financial and operational audits conducted by reputable third-party firms.

- Direct Customer/Partner Verification: As part of due diligence, insist on direct contact and verification with key customers, suppliers, and partners cited in performance claims.

- On-Site Inspections: Conduct physical site visits and inspections to verify operational claims where applicable.

10. Sophisticated Insurance Fraud (Luxury Assets & Beyond)

- The Threat: Deepfakes Falsifying Claims & Ownership

In high-value insurance claims (relevant to HNWIs and luxury asset finance), deepfakes could be used to falsify evidence. This might involve creating fake video documentation of an accident or theft, altering images or videos to exaggerate damage, or even faking proof of ownership for non-existent or already sold luxury assets (art, classic cars, jewellery).

- Mitigation Strategy: Advanced Verification & Physical Assessment Requirements

- Forensic Media Analysis: Employ advanced AI fraud detection tools capable of analysing submitted photo/video evidence for signs of manipulation or AI generation.

- Mandatory Physical Inspections: For high-value claims, require in-person inspections and assessments by qualified, trusted adjusters or appraisers.

- Blockchain/Secure Registries: Explore the use of secure digital registries (potentially blockchain-based) for verifying ownership of high-value assets.
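The property that makes such registries useful is tamper evidence: each ownership record is linked to the previous one by a cryptographic hash, so quietly altering an old entry breaks every link after it. The sketch below illustrates that idea with a simple in-memory hash chain. It is not a blockchain (there is no distributed consensus or replication), and the `Registry` class, record fields, and asset identifiers are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _link_hash(record: dict, prev_hash: str) -> str:
    """Hash a record together with the previous entry's hash."""
    payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


@dataclass
class Registry:
    # Each entry is (record, prev_hash, entry_hash).
    entries: list = field(default_factory=list)

    def append(self, record: dict) -> str:
        prev = self.entries[-1][2] if self.entries else GENESIS
        h = _link_hash(record, prev)
        self.entries.append((record, prev, h))
        return h

    def verify(self) -> bool:
        """Recompute every link; any edited record breaks the chain."""
        prev = GENESIS
        for record, stored_prev, stored_hash in self.entries:
            if stored_prev != prev or _link_hash(record, prev) != stored_hash:
                return False
            prev = stored_hash
        return True

    def current_owner(self, asset_id: str) -> Optional[str]:
        """Latest recorded owner of an asset, or None if it was never registered."""
        owner = None
        for record, _, _ in self.entries:
            if record["asset_id"] == asset_id:
                owner = record["owner"]
        return owner
```

On its own, a hash chain only detects tampering if the latest hash is anchored somewhere the attacker cannot rewrite, for example published periodically or held by several independent parties; that anchoring is what distributed ledgers provide.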

Beyond Detection: Building a Resilient AI Fraud Detection Strategy in Finance

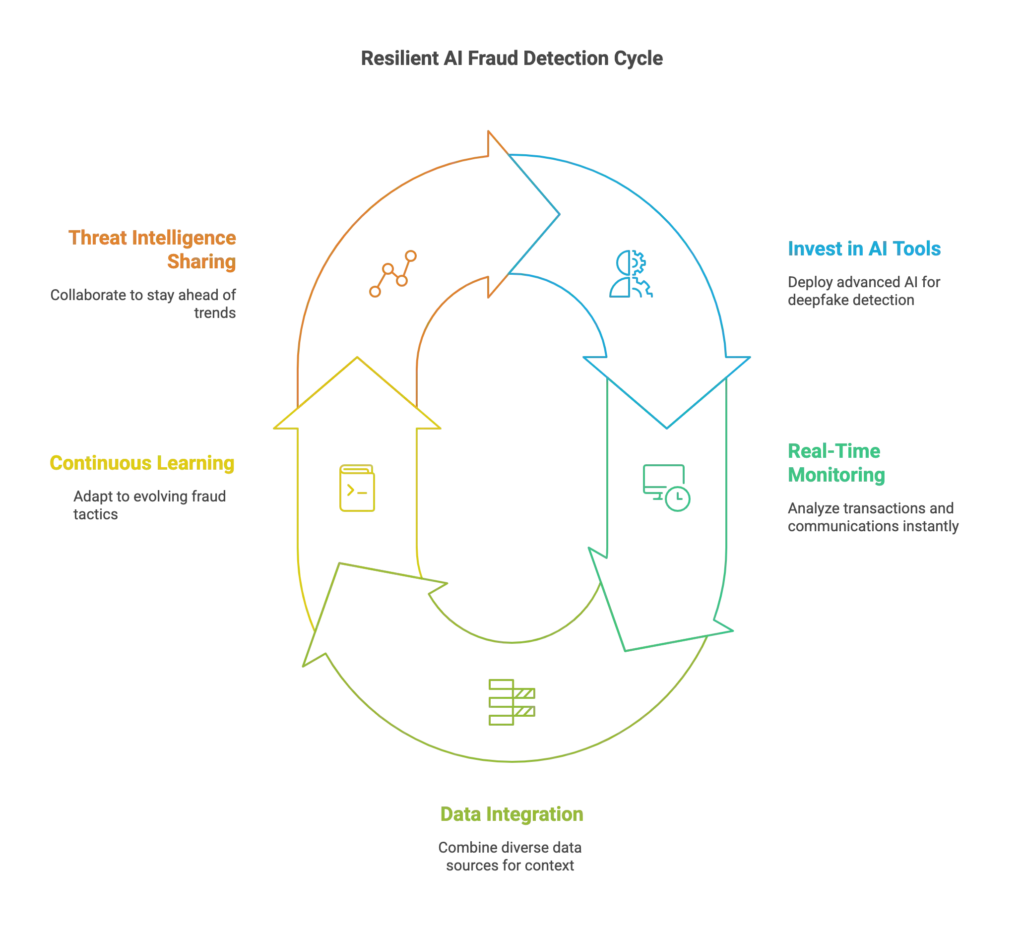

Addressing deepfake threats requires more than just point solutions; it demands a holistic and adaptive AI fraud detection strategy. Key components include:

- Investing in Advanced AI Tools: Deploying sophisticated AI and machine learning models specifically trained for financial deepfake detection across various media types (audio, video, image). These detection models need continuous learning capabilities.

- Real-Time Monitoring: Implementing real-time fraud detection systems that can analyse communications and transactions as they happen, flagging suspicious activity immediately. Even a modest improvement in real-time detection, on the order of 10% or more, can yield significant savings.

- Data Integration: Breaking down data silos to provide AI models with a richer context for identifying anomalies and fraud patterns. This includes transaction data, communication metadata, and even behavioral biometrics.

- Continuous Learning & Adaptation: Fraud tactics evolve constantly, especially with generative AI. Your AI fraud detection systems must be designed to learn continuously from new data and adapt to new fraud techniques, and because models make mistakes, their errors should feed back into retraining. AI models learn best from diverse and current data.

- Threat Intelligence Sharing: Participating in industry consortiums and information sharing groups to stay ahead of emerging fraud trends and fraudster methodologies.
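As a minimal illustration of the real-time flagging described above, the sketch below scores each new payment against the running history of the same account, using Welford’s online mean and variance so each update is constant-time, and flags amounts more than a chosen number of standard deviations from that account’s norm. This is a deliberately simple statistical stand-in, not the machine learning models this section describes; the threshold of 3, the minimum history of 5 transactions, and the names `AccountProfile` and `score_and_update` are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class AccountProfile:
    """Running statistics for one account, updated in O(1) per transaction."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0  # sum of squared deviations from the mean (Welford)

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std(self) -> float:
        """Sample standard deviation; 0.0 until there are two observations."""
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


def score_and_update(profile: AccountProfile, amount: float,
                     z_threshold: float = 3.0, min_history: int = 5) -> bool:
    """Return True if `amount` looks anomalous for this account.

    With too little history, or zero spread, no z-score is computed and the
    amount is not flagged. Flagged amounts are held out of the baseline so
    a fraudulent payment does not shift what counts as "normal".
    """
    flagged = False
    sd = profile.std()
    if profile.n >= min_history and sd > 0:
        flagged = abs(amount - profile.mean) / sd > z_threshold
    if not flagged:
        profile.update(amount)
    return flagged
```

Holding flagged amounts out of the baseline is one design choice among several; in practice an analyst’s decision on each alert would determine whether the amount is later folded back in, which is one concrete form of the feedback loop described under continuous learning.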

The benefits of AI in this fight are clear: speed, scale, and the ability to detect fraud signals that human analysts would miss. Leading financial institutions are increasingly using AI to improve fraud detection accuracy and combat transaction fraud. While AI brings its own challenges (e.g., data privacy, model bias, adversarial attacks), the scale of the fraud threat makes embracing these powerful technologies a necessity. Investing in AI fraud detection and prevention is an investment in the stability and trustworthiness of your operations.

The Human Element: Why Technology Alone Isn’t Enough

While AI fraud detection tools are essential, they are most effective when complementing human expertise and vigilance. AI should be one component of a broader fraud prevention strategy.

- Employee Training: Regular, targeted training is paramount. Employees are the first line of defense and must be equipped to recognize potential deepfake attempts and, crucially, adhere strictly to verification protocols.

- Critical Thinking Culture: Foster a workplace culture where questioning unusual requests, even those seemingly from authority figures, is encouraged and rewarded.

- Clear Escalation Paths: Ensure employees know exactly how and to whom they should report suspected deepfake attempts or other fraudulent activities.

- Ethical Oversight: Implement strong governance around the use of AI in fraud detection to ensure fairness, transparency, and compliance with regulations.

Conclusion: Securing the Future of Finance Against Deepfake Threats

Deepfake technology represents a paradigm shift in the landscape of financial crime. The potential for sophisticated impersonation, market manipulation, and large-scale fraudulent activity demands immediate and strategic action from leaders across the financial sector. Effective AI fraud detection in finance is no longer just about analysing spreadsheets and transaction logs; it must now encompass the complex challenge of verifying digital identities and communications in an era of powerful generative AI.

By understanding the specific threats outlined above, from executive impersonation to fraudulent KYC and market manipulation, and implementing robust, multi-layered mitigation strategies that combine cutting-edge AI technologies with rigorous processes and vigilant human oversight, financial institutions can significantly bolster their defenses.

Preventing AI fraud in finance, particularly deepfake-driven attacks, is crucial for maintaining client trust, protecting assets, ensuring market stability, and safeguarding institutional reputation. The fight against fraud requires continuous adaptation and investment.

What Next?

The era of deepfake threats is here. For decision-makers and stewards of capital, the time to act is now. We urge you to:

- Review and Update Your Security Protocols: Assess your current AI fraud detection strategies specifically for vulnerabilities to deepfake attacks across all communication channels and processes (onboarding, transactions, internal comms).

- Invest in Advanced AI Fraud Detection Systems: Explore and deploy state-of-the-art AI fraud detection tools equipped for financial deepfake detection and continuous learning. Partner with leading technology providers in AI-based fraud prevention.

- Prioritize Employee Education: Implement comprehensive training programs to raise awareness about deepfake risks and reinforce adherence to verification procedures.

- Stay Informed: Continuously monitor the evolution of deepfake technology in financial services and adapt your defenses accordingly.

To stay ahead of these threats, financial institutions must integrate AI-driven verification tools and build fraud resilience into their digital DNA. Waiting until an incident occurs is no longer an option.

At Forbes Le Brock, we work with clients across the UK, Europe and Asia-Pacific. Contact us today to discuss your AI fraud detection options.
