Unmasking the Deepfake CFO Scam: When the CFO Wasn’t Real

In finance, trust and verification are paramount. But what happens when the person you’re speaking to, whether your trusted colleague or even your CEO, isn’t real?

This is the chilling reality of the Deepfake CFO Scam, a fraud that cost the Hong Kong office of a multinational firm over US$25 million. Powered by AI, deepfakes are a growing threat in finance: they bypass traditional verification methods and create new risks for C-suite executives, investors, and high-net-worth individuals (HNWIs).

The Alarming Reality of the Deepfake CFO Scam: A $25 Million Wake-Up Call from Hong Kong

Deepfakes, AI-generated videos that mimic a person’s likeness or voice, have evolved from harmless entertainment into a serious tool for AI financial fraud. In the Hong Kong-based Arup deepfake case, an employee received a phishing email purporting to come from the company’s UK-based Chief Financial Officer (CFO). The employee was then invited to a video call in which the CFO and other senior colleagues appeared to give urgent transfer instructions. Believing the call was legitimate, the employee approved HK$200 million (approx. US$25.6 million) in transfers, spread across several transactions.

It wasn’t until later that the employee realised the entire call had been a deepfake, a digital replica of the real CFO and executives. The fraudsters had used artificial intelligence to create convincing video and voice replicas, bypassing standard security checks and exploiting trust.

This wasn’t an isolated case: several other deepfake incidents have surfaced across the finance sector, so it is important to know what to look for.

Deconstructing the Arup Deepfake CFO Scam: How AI-Driven Fraudsters Executed a Multi-Million Dollar Heist

In early 2024, a finance worker at Arup, a multinational engineering firm, received a phishing email claiming to be from the UK-based CFO and requesting a confidential transaction. The employee, initially sceptical, joined a video conference call to confirm the details.

During the call, the employee saw familiar faces: Arup’s CFO and other senior colleagues, who looked and sounded just like the real executives. They urgently pushed for financial transfers, citing confidentiality. Trusting the legitimacy of the video call, the employee approved HK$200 million (around US$25.6 million) in transfers across multiple transactions and bank accounts.

The truth? Every participant in the video call, except the employee, was a deepfake. Fraudsters had used AI-generated tools to create digital replicas of Arup executives, likely training their models on publicly available footage. The attack exploited trust, urgency, and authority to trick the employee into bypassing normal procedures. By the time the deception was discovered, US$25.6 million had already been transferred. Hong Kong police launched an investigation, and the case became one of the most striking examples of AI-driven fraud to date.

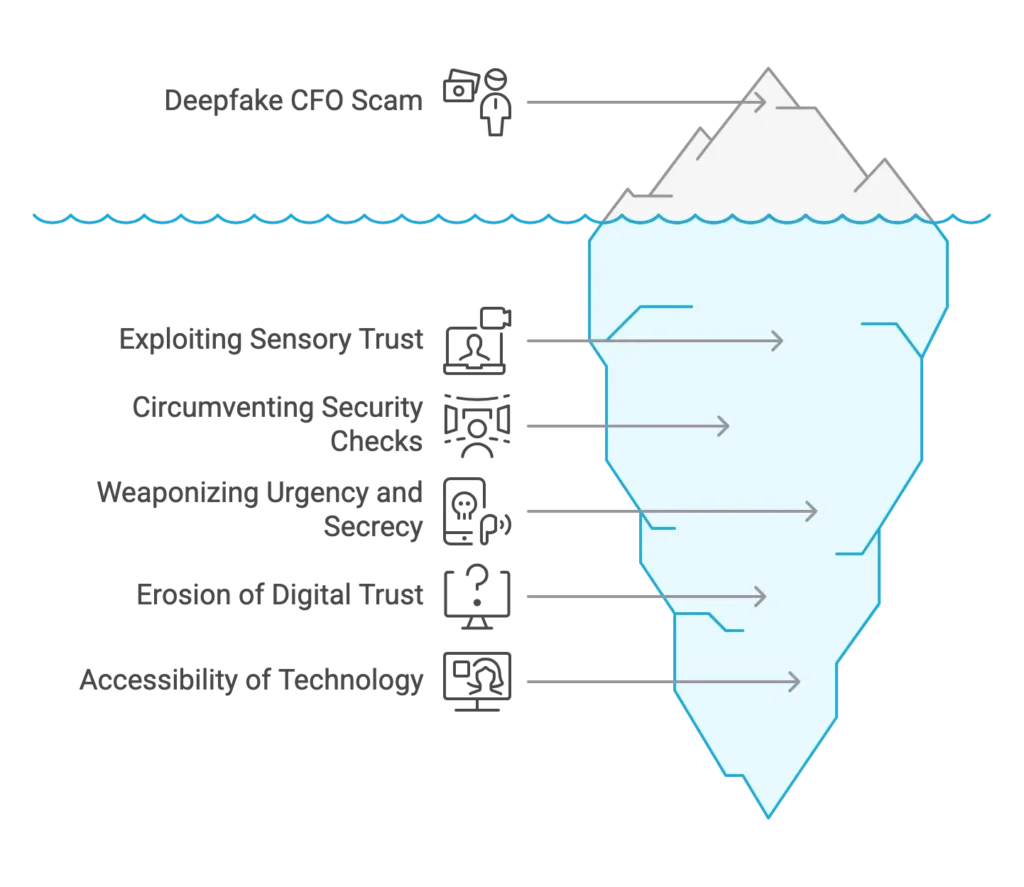

The Unique Danger: Why Deepfakes Bypass Traditional Security Measures

The Deepfake CFO Scam represents a new form of financial fraud, powered by AI. It is one of many recent examples of the risks posed by AI misuse in high-stakes financial environments. Here’s why it’s so dangerous:

- Exploiting Sensory Trust: Video calls feel more legitimate than emails or phone calls. Seeing a familiar face, especially a senior exec, increases the psychological pressure to comply.

- Circumventing Standard Checks: Deepfakes can defeat confirmation by voice or face, so those checks lose much of their value unless combined with other security measures.

- Weaponising Urgency: Scammers create a sense of urgency and secrecy, forcing quick, unverified action.

- Erosion of Digital Trust: As deepfakes become more common, the trust we place in digital communications is diminishing. Companies must implement additional verification layers.

- Accessibility of Technology: Creating deepfakes no longer requires significant computing power. Generative AI tools make it easier for criminals to produce realistic frauds.

Beyond the Deepfake CFO Scam: Real Risks in High-Stakes Finance

While the Arup case involved a deepfake CFO, the same tactics can be used against a range of sectors:

- Private Credit & Lending: Fraudsters could impersonate key personnel to approve significant loan draws or covenant waivers via deepfake video calls.

- Commercial Real Estate Finance: Fraudsters could use deepfake video calls to change wire instructions during the closing process, redirecting millions.

- Luxury Asset Finance: Scammers could impersonate clients to authorise fraudulent payments for asset transfers, citing “urgent maintenance” needs.

- Securities-Based Lending: Deepfakes could be used to authorise asset liquidations or new loans, targeting high-value portfolio assets.

- Investor Relations & HNWI Communications: Fraudsters may impersonate trusted advisors to request urgent capital transfers or manipulate stock prices.

These scenarios are entirely plausible. High-value transactions, heavy reliance on digital communication, and trust-based approvals make these sectors prime targets. Vigilance is critical.

Factors Fuelling the Surge in AI-Driven Financial Fraud

The rise of AI-driven attacks like the Hong Kong deepfake fraud is part of a larger trend. Several factors are driving this surge:

- Advancements in AI: Deepfake technology is improving rapidly, making fake voices and images harder to detect.

- Remote Work: With more video calls in remote and hybrid work setups, opportunities for deepfakes to slip through have increased.

- Data Availability: Publicly available content from social media and corporate sites provides fraudsters with ample material to create convincing deepfakes.

- Cybercriminal Sophistication: Organised cybercrime groups are using AI to complement traditional tactics, becoming more patient and strategic in their attacks.

- Security Gaps: Many organisations still rely on outdated verification methods like voice and video ID, which are no longer reliable against deepfakes.

These factors combine to make the financial sector a growing target for AI-driven fraud.

Proactive Measures Against Deepfake CFO Scams

| Measure | Action | Purpose |
|---|---|---|
| Multi-Channel Verification | Confirm transactions through a separate channel: a phone callback, secure messaging, or an in-person check. | Ensure no single channel (e.g. video) can authorise a payment on its own. |
| Employee Training | Train staff to recognise deepfake red flags such as urgency, visual or audio inconsistencies, and procedural irregularities. | Empower employees to spot and report suspicious activity. |
| AI Detection Tools | Invest in AI tools that analyse media for deepfake artefacts, but don’t rely on them alone. | Help identify fake media while keeping other safeguards in place. |
| Update Security Protocols | Regularly audit transaction approval and communication security. | Keep protocols resilient to evolving AI threats. |
| Secure Communication Channels | Use encrypted platforms for sensitive communications and verify requests through trusted channels. | Protect against fraudulent requests initiated by email or messaging. |
| Incident Response Plan | Develop a clear plan for reporting fraud and attempting fund recovery. | Enable a swift, coordinated response to minimise damage. |
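The Multi-Channel Verification row can be sketched as a simple release rule. This is a minimal illustration under stated assumptions, not a complete control: it assumes each payment request records the channel it arrived on, and that confirmation must come through a different channel using contact details from an internal directory rather than from the request itself, since an attacker controls everything in the request. The names `DIRECTORY`, `Confirmation`, `PaymentRequest` and `can_release`, and the phone numbers, are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical internal directory of verified contact details, keyed by role.
# Contacts are looked up here, never copied from the incoming request.
DIRECTORY = {
    "cfo": {"phone": "+44 20 7946 0000", "secure_chat": "cfo@internal"},
}

@dataclass
class Confirmation:
    channel: str       # channel used to confirm, e.g. "phone"
    contact: str       # number or handle actually used to reach the person
    confirmed_by: str  # role of the person who confirmed

@dataclass
class PaymentRequest:
    requester_role: str  # who the request claims to come from
    origin_channel: str  # channel the request arrived on, e.g. "video_call"
    amount: float
    confirmations: list[Confirmation] = field(default_factory=list)

def can_release(req: PaymentRequest) -> bool:
    """Release only if the claimed requester confirmed on a different channel,
    reached via contact details taken from the internal directory."""
    known = DIRECTORY.get(req.requester_role)
    if known is None:
        return False  # requester not in the directory: never release
    return any(
        c.channel != req.origin_channel           # out-of-band: not the request's channel
        and known.get(c.channel) == c.contact     # contact details came from the directory
        and c.confirmed_by == req.requester_role  # the claimed requester confirmed
        for c in req.confirmations
    )

if __name__ == "__main__":
    req = PaymentRequest("cfo", "video_call", 25_600_000)
    print(can_release(req))  # False: nothing confirmed yet
    # A number supplied in the phishing email does not count.
    req.confirmations.append(Confirmation("phone", "+852 5555 0000", "cfo"))
    print(can_release(req))  # False
    # Calling back on the directory number does.
    req.confirmations.append(Confirmation("phone", "+44 20 7946 0000", "cfo"))
    print(can_release(req))  # True
```

The rule deliberately treats a confirmation on the same channel as the original request as non-independent, so "confirming" a transfer on the very video call that requested it never releases funds.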

Conclusion: Protecting Your Assets in an AI-Driven World

The $25 million Deepfake CFO Scam in Hong Kong highlights a growing threat: AI-powered fraud. As technology advances, fraudsters have more tools at their disposal, leaving traditional security measures insufficient on their own.

For decision-makers and investors, the key takeaway is clear: complacency is dangerous. To protect your business and assets, you need proactive, multi-layered security strategies that combine technology, processes, and employee training. Defending against deepfake fraud is no longer optional; it’s a strategic necessity to stay ahead of rapidly evolving threats.

How to Respond to a Deepfake CFO Scam

The rise of AI-driven fraud, such as the Deepfake CFO scam, makes enhanced security essential for high-value financial transactions. If you handle asset-based finance, real estate, or large portfolios, it is crucial to act now.

Are your verification processes robust enough to guard against sophisticated deepfake attacks?

Speak to our experts for a confidential consultation. With deep experience securing complex deals in luxury asset finance, securities-based lending, and commercial real estate, we can help you implement effective strategies to protect your business and assets from these evolving threats.

Contact us today to strengthen your defences against deepfake fraud like the Deepfake CFO scam and other financial risks. We proudly serve clients across the UK, Europe, and Asia-Pacific.

FAQs

1. What is a deepfake CFO scam?

A deepfake CFO scam is a type of financial fraud where criminals use AI-generated video or voice to impersonate a company’s Chief Financial Officer. The goal is often to trick employees into authorising fraudulent transfers or disclosing sensitive information.

2. How do fraudsters create deepfake CFO videos or calls?

They use AI tools trained on publicly available video or audio of the real CFO. These tools can generate convincing real-time video or voice replicas, making it difficult for staff to spot the deception.

3. Who is most at risk from deepfake financial scams?

Firms that handle large financial transactions, particularly in finance, real estate, and securities-based lending. Executives and staff in finance or treasury departments are primary targets.

4. Can standard security checks stop deepfake scams?

Often, no. Traditional checks like email confirmation or voice recognition are not enough. These scams exploit trust and urgency, so layered security protocols and human verification steps are essential.

5. What steps can companies take to prevent a deepfake CFO scam?

Introduce strict internal controls for authorising payments, require multi-person approval, train staff to spot social engineering tactics, and use advanced fraud detection tools that flag unusual activity or changes in communication patterns.
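Multi-person approval and flagging unusual activity can start as plain rules before any specialised tooling is added. The sketch below is illustrative only: the threshold, the 24-hour window and the three-account limit are hypothetical values, and the split-payment check is an assumed heuristic loosely modelled on the Arup transfers, which went to multiple bank accounts. The names `Transfer` and `review` are likewise hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

DUAL_APPROVAL_THRESHOLD = 100_000   # hypothetical: amounts at or above need two approvers
SPLIT_WINDOW = timedelta(hours=24)  # hypothetical look-back window for split transfers
SPLIT_ACCOUNT_LIMIT = 3             # hypothetical: this many distinct payees in the window is unusual

@dataclass
class Transfer:
    amount: float
    beneficiary_account: str
    requested_at: datetime
    approvers: frozenset  # IDs of staff who approved; a set, so duplicates don't count twice

def review(transfer: Transfer, known_accounts: set[str],
           recent_transfers: list[Transfer]) -> list[str]:
    """Return reasons to hold the transfer; an empty list means no rule fired."""
    reasons = []
    # Multi-person approval: large transfers need at least two distinct approvers.
    if transfer.amount >= DUAL_APPROVAL_THRESHOLD and len(transfer.approvers) < 2:
        reasons.append("needs a second approver")
    # New payee: the account has never been paid before.
    if transfer.beneficiary_account not in known_accounts:
        reasons.append("new beneficiary account")
    # Split pattern: many distinct accounts paid within the look-back window.
    window_start = transfer.requested_at - SPLIT_WINDOW
    accounts = {t.beneficiary_account for t in recent_transfers
                if window_start <= t.requested_at <= transfer.requested_at}
    accounts.add(transfer.beneficiary_account)
    if len(accounts) >= SPLIT_ACCOUNT_LIMIT:
        reasons.append("payments to several accounts in a short window")
    return reasons

if __name__ == "__main__":
    now = datetime(2024, 1, 15, 10, 0)
    earlier = [Transfer(5_000_000, f"ACC-{i}", now - timedelta(hours=1), frozenset({"emp1"}))
               for i in range(1, 5)]
    suspicious = Transfer(5_000_000, "ACC-5", now, frozenset({"emp1"}))
    print(review(suspicious, known_accounts={"ACC-0"}, recent_transfers=earlier))
    # ['needs a second approver', 'new beneficiary account', 'payments to several accounts in a short window']
```

Returning a list of reasons, rather than a single yes/no, gives the reviewing staff member something concrete to check before the payment is released.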
